Compliant Innovation - Creating a Culture of Privacy in a Tech-Driven Environment

Mark Zuckerberg famously told innovators that they needed to “move fast and break things” in order to succeed. The implicit notion is that speed to scale is paramount, so that by the time things go wrong, the company is too big to fail. Given his experience with Facebook, now Meta, it’s hard to argue he was wrong. Indeed, this growth-above-all-else mindset held true for much of the first two decades of the 21st century, as “unicorns” like Google, Facebook, Uber and Netflix raced to multi-billion-dollar valuations. Over the past few years, however, regulators, journalists and consumers have finally begun to catch on to the false promise of “better living through technology” and to demand more from these companies than user counts.

Accordingly, today’s start-ups do not have the luxury of operating in a question-free environment where the public takes them at their word, as stated in their ad copy or pitch deck. Theranos, WeWork and countless lesser-known busts have burned so much money and trust that start-ups are now scrutinized far more carefully, with the health of the business and the profile of the executive team receiving as much attention as, if not more than, the number of downloads of a minimum viable app.

Furthermore, U.S. regulators are finally catching up to the rest of the world with regard to privacy. Once viewed as a contractual right that consumers could sign away, privacy is increasingly being treated by U.S. lawmakers as a fundamental human right, as is the case in Europe, Asia and elsewhere. Recognizing the grossly imbalanced negotiating power between a company like Apple and its millions of customers, who readily click “I accept” on anything put in front of them, lawmakers are beginning to change the law to give consumers a stronger bargaining position vis-à-vis technology providers. Given this sea change, it is imperative that companies prepare for new ways of handling personal and sensitive information, but the question is often where to start.

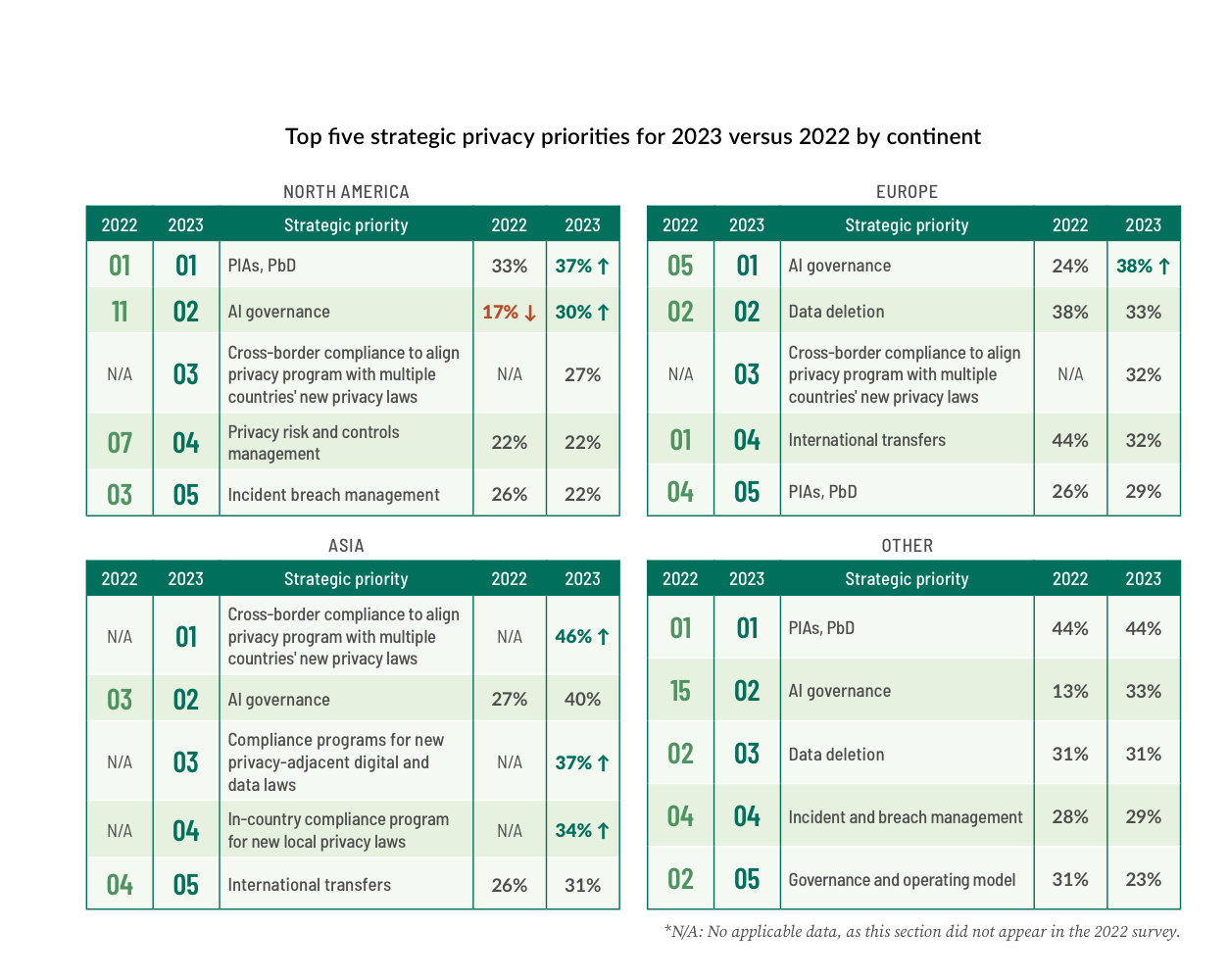

As the saying goes, the best place to start is at the beginning, and in software development, this often means the code itself. By shifting privacy work “to the left,” that is, earlier in the development lifecycle, software developers can proactively build privacy safeguards around data access, storage and sharing, and avoid costly fixes down the road caused by bugs, breaches or regulatory changes. This overall concept is known as “Privacy by Design” (PbD), and it is rapidly becoming a top strategic priority at companies worldwide.

We will go into the details of what PbD is and how it can be implemented in your software development lifecycle in future articles, but it is important to stress now that the earlier you build data classification, governance and deletion functions into your code, the fewer headaches you will have later when something goes awry. It is no longer sufficient to link to a privacy policy and put up a cookie consent banner for visitors to your website. Nor is privacy purely a technology problem; it requires strong cross-functional collaboration between engineers and privacy professionals who work as a team to manage the inputs and outputs of the program. Ultimately, the goal is to build trust with users by being transparent about how you use their data, and when you do, the result is a more engaged user base that is willing to share more data with you.

In our next article, we will explore how software development has evolved from a linear waterfall process to agile methodologies, and discuss how this evolution presents both challenges and opportunities for proactively designing privacy capabilities and safeguards into our products.